Featuring recordings of Alice Huang (cello) and Sally Atkinson (mezzo-soprano), this piece is a meditative dialogue between two voices. While the piece has personal meaning for me, it has no programmatic agenda. Its structure is based on Fibonacci numbers, with particular attention to proportion and duration. Within this framework, the performer improvises with visuals and sounds by pressing preprogrammed keys on a laptop. (Please note: this is a slow-developing, meditative piece; the video below is not intended for quick browsing.)

Download score here.

Sound Production Notes

Method 1: Creation of ‘Drone Chords’

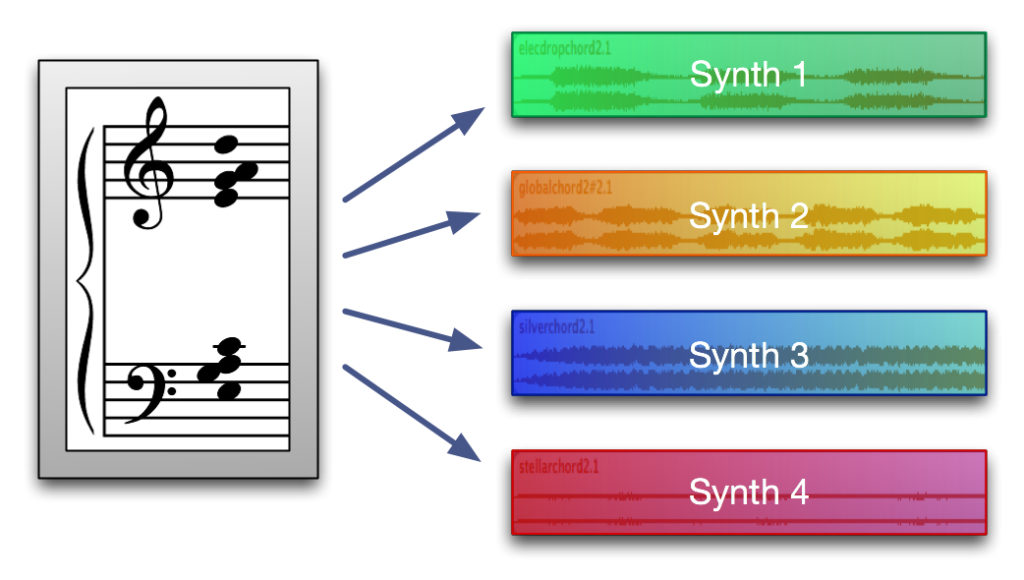

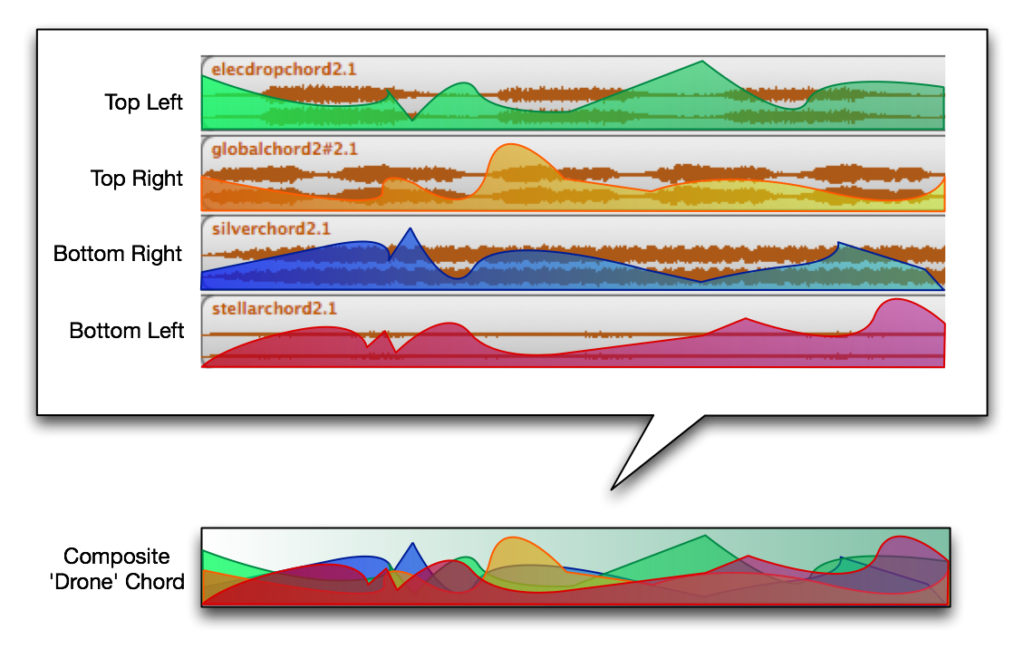

The first stage of preproduction was the creation of sound files to serve as drones for the piece's basic chord progression. Starting from recordings of four synth patches, each playing the same chords, a recording technique was developed in which mousestate values (the mouse position reported by Max's mousestate object) alter the volume level of each sound file according to mouse movement.

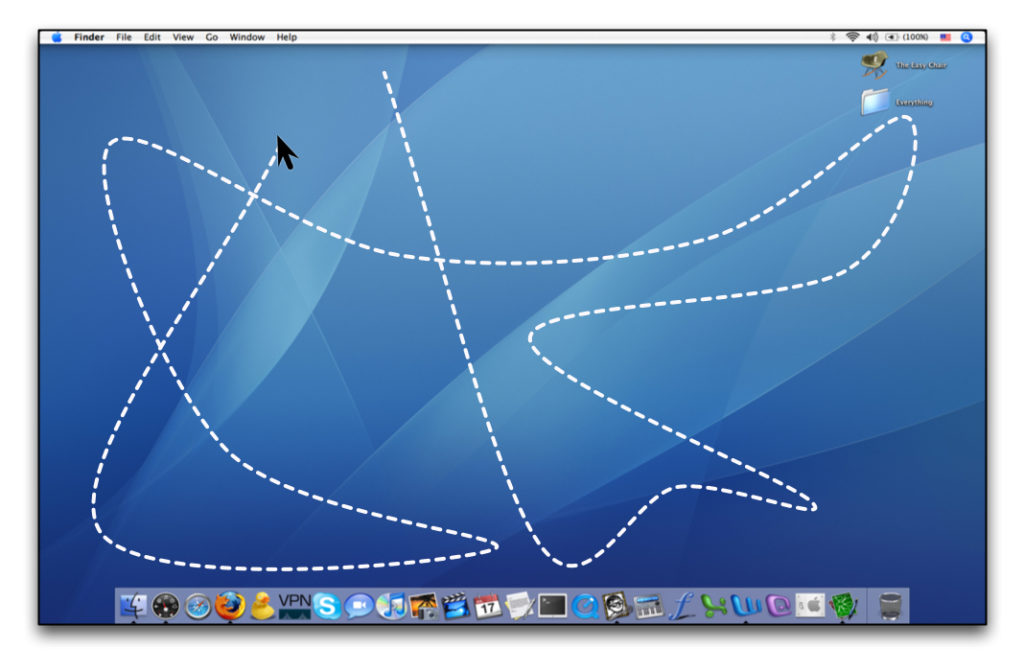

All four sound files play simultaneously while their volume faders follow the X and Y coordinates of the mouse. Each file reaches its maximum volume at one of the four corners of the 1440 × 960 screen: (0, 0), (1440, 0), (1440, 960), and (0, 960). As the mouse moves, the volume changes to each file are recorded in real time, yielding a composite sound file made from the original four.
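The four-corner mapping can be illustrated as a bilinear crossfade. This is a minimal Python sketch, not the original Max/MSP patch: the text only states that each file peaks at one corner, so the bilinear gain law, the function names `corner_gains` and `record_composite`, and the use of one mouse position per sample are all assumptions made for illustration.

```python
# Hypothetical sketch: four-corner crossfade driven by mouse position.
# The bilinear gain law is an assumption, not the documented patch logic.
SCREEN_W = 1440
SCREEN_H = 960


def corner_gains(x, y, width=SCREEN_W, height=SCREEN_H):
    """Return gains for files 1-4, which peak at (0, 0), (width, 0),
    (width, height) and (0, height) respectively."""
    # Clamp the position to the screen and normalise it to the range 0..1.
    u = min(max(x / width, 0.0), 1.0)
    v = min(max(y / height, 0.0), 1.0)
    return (
        (1 - u) * (1 - v),  # file 1: corner (0, 0)
        u * (1 - v),        # file 2: corner (width, 0)
        u * v,              # file 3: corner (width, height)
        (1 - u) * v,        # file 4: corner (0, height)
    )


def record_composite(files, mouse_path):
    """Mix four equal-length sample lists into one composite, applying
    the gains for the mouse position logged at each sample index."""
    composite = []
    for i, (x, y) in enumerate(mouse_path):
        gains = corner_gains(x, y)
        composite.append(sum(g * f[i] for g, f in zip(gains, files)))
    return composite
```

With this assumed mapping, the screen centre (720, 480) gives each file a gain of 0.25, and the four gains always sum to 1, so the composite is a weighted average of the four drones. A real patch would more likely poll the mouse at control rate and smooth the gain changes than read a position for every sample.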

Method 2: Creation of ‘Chopped’ Sound Files

With the drone files produced, the second stage involved generating a secondary set of four sound files, patterned after the rapid volume oscillations scheduled for 6:58 in the piece (at the golden mean). These ‘chopped’ sound files provide a textural contrast to the smooth, gradual changes of the drone chords.
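The link between the Fibonacci structure and the golden mean is that ratios of consecutive Fibonacci numbers (8/5, 13/8, 21/13, …) converge on φ ≈ 1.618. The text does not give the piece's total length, so the arithmetic below is only a hedged illustration: assuming 6:58 (418 s) is the golden section of the whole piece with the longer section first, the implied total is 418 × φ ≈ 676 s, or about 11:16. If the shorter section came first, the total would instead be 418 × φ² ≈ 1094 s. The helper names are hypothetical.

```python
import math

# Golden ratio, about 1.618.
PHI = (1 + math.sqrt(5)) / 2


def fibonacci(n):
    """Return the first n Fibonacci numbers, starting 1, 1, 2, 3, ..."""
    seq = [1, 1]
    while len(seq) < n:
        seq.append(seq[-1] + seq[-2])
    return seq[:n]


def golden_point(total_s):
    """Time in seconds that splits total_s so whole/longer == longer/shorter,
    assuming the longer section comes first."""
    return total_s / PHI


def implied_total(point_s):
    """Total duration implied if point_s is that golden point (an assumption)."""
    return point_s * PHI


def mmss(seconds):
    """Format a duration in seconds as m:ss, rounded to the nearest second."""
    m, s = divmod(round(seconds), 60)
    return f"{m}:{s:02d}"


marker = 6 * 60 + 58  # 6:58 expressed in seconds
print(mmss(implied_total(marker)))
fib = fibonacci(10)
print(fib[-1] / fib[-2])  # ratio of consecutive terms approaches PHI
```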

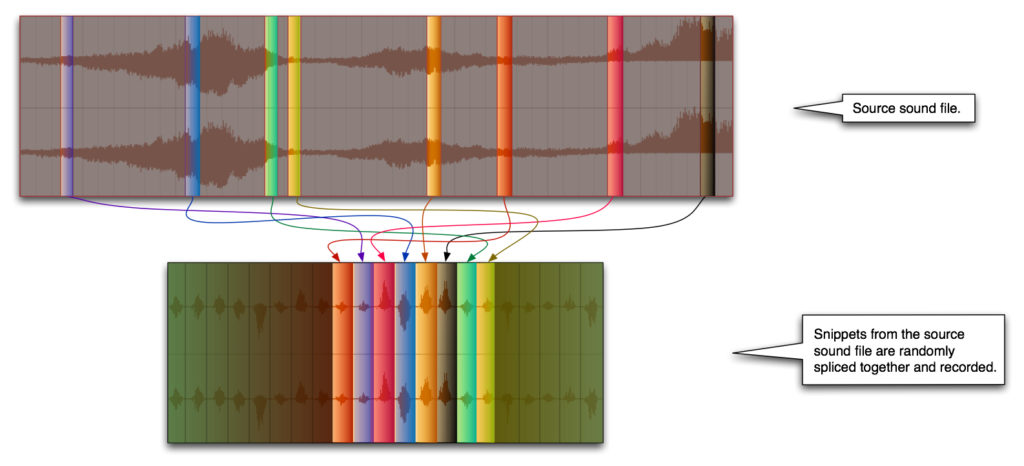

A Max/MSP patch takes a source sound file and randomly splices 100 ms snippets together to create a ‘chopped’ effect. Both the snippet duration and the range within the source file from which snippets are drawn can be altered in real time while recording. Recording this process yields a new sound file, which is then used in performance. Sound Files 2–4, which the laptop performer turns on or off, are derived from the preprogrammed drone chords. Sound File 1 is a chopped derivation of a 30-second clip of a male voice reading a text.
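The random splicing step can be sketched offline in Python. This is a minimal illustration under stated assumptions, not the Max/MSP patch itself: the function `chop` and its parameters are hypothetical, the source is taken as a plain list of samples, and snippets are joined without crossfades. Because the original patch changes snippet length and source range in real time, the sketch would model that by calling `chop` repeatedly with different settings and concatenating the results.

```python
import random


def chop(source, sample_rate, out_seconds, snippet_ms=100,
         range_start_s=0.0, range_end_s=None, seed=None):
    """Hypothetical sketch: build a 'chopped' signal by splicing randomly
    chosen snippets of snippet_ms milliseconds, each taken entirely from
    the window [range_start_s, range_end_s) of the source."""
    rng = random.Random(seed)
    snippet_len = int(sample_rate * snippet_ms / 1000)
    lo = int(range_start_s * sample_rate)
    hi = len(source) if range_end_s is None else int(range_end_s * sample_rate)
    hi = min(hi, len(source))  # never read past the end of the source
    if snippet_len <= 0 or hi - lo < snippet_len:
        raise ValueError("source range must hold at least one snippet")
    target = int(out_seconds * sample_rate)
    out = []
    while len(out) < target:
        # randint's upper bound is inclusive, so the snippet ends at or before hi.
        start = rng.randint(lo, hi - snippet_len)
        out.extend(source[start:start + snippet_len])
    return out[:target]  # trim the last snippet to the requested length
```

For example, `chop(voice, 44100, 10.0, range_start_s=5.0, range_end_s=8.0)` would assemble ten seconds of 100 ms fragments drawn only from seconds 5 to 8 of a hypothetical `voice` sample list.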